CRM DATA AUTOMATION

Background

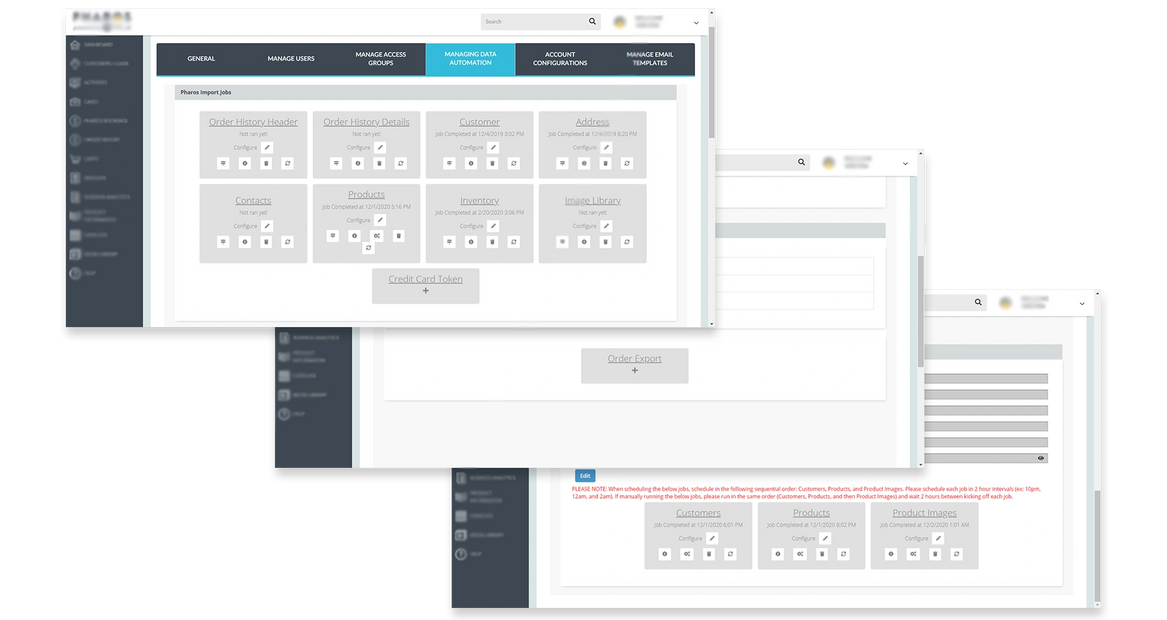

We launched the CRM desktop product in early 2019, and part of the MVP was a basic data automation page that iframed in a data interface originally created for internal use only. As high-caliber customers adopted the tool, more and more users needed access to these pages to set up import and export jobs for the Product, Customer, and Order data coming into and leaving their CRM account, so we knew we needed to redesign the Data Automation feature.

As part of the larger effort to give our users the reins to set up and control various parts of the tool without developer intervention or hand-holding by the Customer Success & Onboarding teams, I was responsible for researching the industry standard, understanding the users who would be using these pages, and designing the Data Automation experience within the confines of our limited resources and timeline.

The flow of data into and out of CRM is a core pillar of the product and of its interaction with the rest of the product suite, as this data is key to running a functioning website and mobile sales app and to displaying proper information in the CRM account. It was therefore imperative that the Data Automation feature be simplified, focused, and easier to comprehend for everyday users with basic knowledge of key data files.

The Problem

Initially, the project was scoped to reuse the existing functionality of our original internal tool, with a bit of a facelift. After diving deeper into the various areas of the Data Automation tool, I found the existing design and user flows entirely overwhelming: they provided both unnecessary and under-explained information and lacked what I believed to be key functionality. More specifically, the design pattern used to indicate the various requirements was unclear. Users risked setting up data jobs incorrectly, or not at all, which in turn risked incorrect or inadequate data not only in the CRM account but in every other product that relied on the data set.

Research & Exploration

I first reached out to our Customer Success team to understand whether support tickets correlated with confusion around data jobs in the CRM product, or with incorrect or incomplete data sets in other products relying on the data from CRM. We found three common themes. First, a large number of our support tickets were requests from users to have members of our development and onboarding teams create import and export jobs for them. Second, a handful of tickets related to connecting FTPs and deleting old FTPs that were added in error. Third, users needed to be given the data specs for each of the key data files, as many had misplaced the spec they received during their onboarding period or questioned which data points they needed to provide.

The Product Manager and I identified a group of 5 internal users to see how they went about setting up the various import and export jobs from the CRM product using the current Data Automation tool. The users were asked to screenshare, explain their steps, and speak openly about where they found information, flows, or patterns confusing. We asked each of them for a list of the most common mistakes they made using the tool, as well as a list of "nice-to-have" features that would make their lives easier.

I confirmed that our Data Automation tool relied too heavily on the assumption that users would know what data was required and how to map it, and that users had no way to add or remove connected FTPs without a developer's assistance.

Ideation

Aside from the lack of direction given to the user in any part of the tool, part of what made the existing design pattern confusing was its reliance on icons for various functions, even though those icons didn't accurately represent the functions. They were too vague for such important and complex features. Working with the PM and focus group, I added copy to the redesign so that users would have a clear understanding of what each feature did and what steps they should take on the pages themselves. Simply put, we gave the users the direction they desperately needed.

I broke up the various actions and features into their own sections in a tab-like design, following the design pattern used in other areas of the tool. This helped to better organize the various functions, so the user could easily navigate to where they needed to go. For example, the FTP feature was originally only accessible when initially setting up a data feed, which basically made it inaccessible after the feed had been set up. I broke it out into its own section and added functionality like deleting FTPs, checking connectivity, and verifying credentials.

Users would be prompted to navigate through various steps in the data feed setup and mapping process. To combat user confusion, it was important to design it as a wizard that the user goes through step by step, where it was easy to click back or next to review the steps. I also added some functionality our users had requested: the fields would auto-map based on naming, and when the user mapped fields manually using the dropdowns, they could easily identify the fields that were already mapped, which greatly improved usability.
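To make the auto-mapping idea concrete, here is a minimal sketch of what name-based matching could look like. It is an illustration only, not the logic of our data engine: the function names `normalize` and `auto_map_fields`, the rule of ignoring case and punctuation, and the sample field names are all assumptions made for this example.

```python
import re


def normalize(name: str) -> str:
    """Lowercase a name and drop everything except letters and digits."""
    return re.sub(r"[^a-z0-9]", "", name.lower())


def auto_map_fields(file_columns, crm_fields):
    """Map each CRM field to the file column with the same normalized name.

    Hypothetical helper: returns (mapping, unmapped), where mapping is
    {crm_field: file_column} and unmapped lists the CRM fields that are
    left for the user to map manually with the dropdowns.
    """
    by_normalized = {}
    for column in file_columns:
        # Keep the first column seen for a given normalized name.
        by_normalized.setdefault(normalize(column), column)

    mapping, unmapped = {}, []
    for field in crm_fields:
        match = by_normalized.get(normalize(field))
        if match is None:
            unmapped.append(field)
        else:
            mapping[field] = match
    return mapping, unmapped


# Example with made-up column and field names.
mapping, unmapped = auto_map_fields(
    ["Product_ID", "product name", "Cost"],
    ["product_id", "ProductName", "price"],
)
```

In this sketch the `mapping` result is what the wizard could use to mark fields as already mapped, while `unmapped` is the short list the user still has to handle by hand.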

I used color to indicate the status of the different feeds that had already been set up, and added links that direct users to downloadable data specs. The design solution allowed us to develop one pattern that created consistency for users across the various areas of the tool, rather than having a completely different design for this one feature that was unlike the rest of the sections in the CRM product.

Initially, I explored showing the user feedback on any errors that might occur during a data feed's scheduled run or after an on-demand run. After working closely with the development team to understand error reporting constraints, I found it made more sense to show a detailed log of issues only when there were errors during a feed's execution, and to limit the feedback to a single success message when the feed completed without errors. Users do not need to be inundated with a log of everything that was processed if it all went through successfully. They only need a log to refer to if there were issues, and that log only needs to include the reported issues, not the successes. This avoided overwhelming users with more information than they needed and kept the focus on actionable items.
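The reporting rule described above can be sketched in a few lines. This is a simplified illustration under stated assumptions, not the development team's implementation: the `summarize_run` function, the record shape (`row`, `status`, `message`), and the message wording are all hypothetical.

```python
def summarize_run(records):
    """Build the user-facing feedback for one feed run.

    records: list of dicts with the assumed keys 'row', 'status'
    ('ok' or 'error'), and 'message'.
    """
    # Keep only the records that reported a problem.
    errors = [r for r in records if r["status"] == "error"]

    if not errors:
        # Clean run: a single success message and no log at all.
        return {
            "success": True,
            "message": "Feed completed without errors.",
            "log": [],
        }

    # Run with issues: the log lists the errors only, never the successes.
    return {
        "success": False,
        "message": f"Feed completed with {len(errors)} issue(s).",
        "log": errors,
    }


# Example with made-up rows: two processed fine, one failed.
result = summarize_run([
    {"row": 1, "status": "ok", "message": ""},
    {"row": 2, "status": "error", "message": "Missing product ID"},
    {"row": 3, "status": "ok", "message": ""},
])
```

The point of the design is visible in the return values: a successful run never produces a log for the user to read, and a failed run produces only the rows they need to act on.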

Results & Reflection

One lesson that always proves useful is to understand the technology behind your products. That knowledge was definitely helpful in making UX decisions, and gaining it also built a stronger working relationship with many members of the development team. Understanding our data engine proved helpful in later designs and gave me perspective on how much is working behind the scenes every time a user interacts with the platform.

It was really inspiring to utilize feedback from the test group we interviewed before the launch and interacted with again after the initial wireframes were created. Their voice was key to driving the decisions we made, and they brought some "wants" to the table that we weren't aware of when the project kicked off. Getting a shout-out from the Customer Service team after the presentation of the high-fidelity mockups and the subsequent launch really drove home how much of a difference this project made in the day-to-day of many of our own internal team members, in addition to our external users.

Since this project, we have started discussions around adding functionality to support API feeds as we begin to move away from file-based data exchange. For us as a company, this data integration will be key to powering the suite, and while we will need to support existing users who refuse to move towards APIs, we must lay the groundwork for a more sustainable and scalable data exchange process.

Want to learn more? Drop me a line.

dmart_09@yahoo.com

Copyright © 2021 Devon Martin - All Rights Reserved.